JunoMind: Designing an Interface for Multi-Agent AI Collaboration

A UI system for managing distributed AI decision-making at scale

/agentic systems/

/interface design

As AI agents become more autonomous and specialized, users will need more than a chatbot interface — they’ll need tools for coordinating, monitoring, and reasoning across multiple agents working in parallel.

This conceptual project builds on the JunoMind platform, imagining a UI designed for managing teams of AI agents with different roles, skills, and goals. The focus was on real-time visibility, transparent agent behavior, and inter-agent coordination, presented in a layout that supports clarity and control.

Design a scalable interface for overseeing multiple AI agents working on collaborative or concurrent tasks. As the sole designer, I explored interface patterns for distributed decision-making, conflict resolution, and traceability — creating a system where human users can effectively manage, guide, or intervene in AI workflows.

Traditional UX for AI often assumes a one-to-one model (user + assistant). In multi-agent systems, that breaks down. The user now needs to:

The challenge was to design a UI that supports multi-agent complexity without overwhelming the user — especially in high-volume, real-time environments like operations, research, or decision support.

I began by defining three core interface requirements:

The user must understand the high-level state of the entire agent network at a glance — statuses, active tasks, and alerts.

Each agent must be inspectable: task history, reasoning, confidence levels, and current state must be accessible with minimal clicks.

The user should be able to assign tasks, review outputs, resolve conflicts, and monitor inter-agent dialogue without switching contexts.

These principles informed a modular interface structure with strong visual hierarchy and focused affordances for each layer of complexity.

I designed the system around three primary panels:

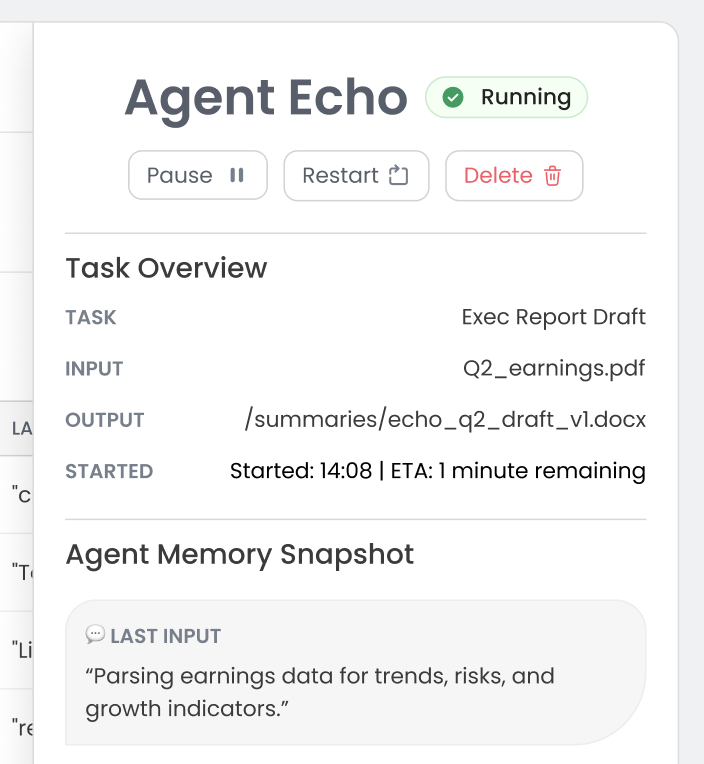

Agent Overview Panel

A dashboard view showing all active agents with real-time status indicators (Idle, Processing, Requesting Input, Error). Agent-specific details appear in overlay cards, which display the current task, output confidence, and any outstanding dependencies.
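As a rough illustration, the data behind an agent card and the at-a-glance summary could be modelled as follows. This is only a sketch: the class names, field names, the 0 to 1 confidence scale, and the rule that "Requesting Input" and "Error" count as needing attention are all assumptions, not part of the design files.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class AgentStatus(Enum):
    # The four status indicators named in the overview panel.
    IDLE = "Idle"
    PROCESSING = "Processing"
    REQUESTING_INPUT = "Requesting Input"
    ERROR = "Error"


@dataclass
class AgentCard:
    # Hypothetical shape of the data shown on one agent card.
    name: str
    status: AgentStatus
    current_task: Optional[str] = None
    confidence: Optional[float] = None  # assumed 0.0-1.0 scale
    dependencies: List[str] = field(default_factory=list)


def summarize(cards):
    """Return per-status counts and the names of agents that need attention."""
    counts = Counter(card.status for card in cards)
    # Assumption: these two statuses are the ones that raise an alert.
    alerting = (AgentStatus.REQUESTING_INPUT, AgentStatus.ERROR)
    needs_attention = [card.name for card in cards if card.status in alerting]
    return counts, needs_attention
```

A dashboard header could render the counts as status badges and use the second list to drive the alert indicator.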

Task View

A task-based view showing how a task has been handed off, split, or modified across agents. This view highlights:

Control Centre

A command surface for issuing new tasks, assigning agents, prioritising outputs, or stepping in to override behaviour. Includes system logs and confirmation flows for critical actions.

All views update in real time and support contextual filters for debugging or task tracking.

The "All Tasks" view gives users oversight of ongoing tasks, agent reasoning within tasks, and any conflicts between agents working on the same tasks.

Solution: Used collapsible agent cards, consistent iconography, and progressive disclosure for low-level logs or explanations.

Solution: Created a structured conflict resolution format that lets the user make an efficient decision when necessary.

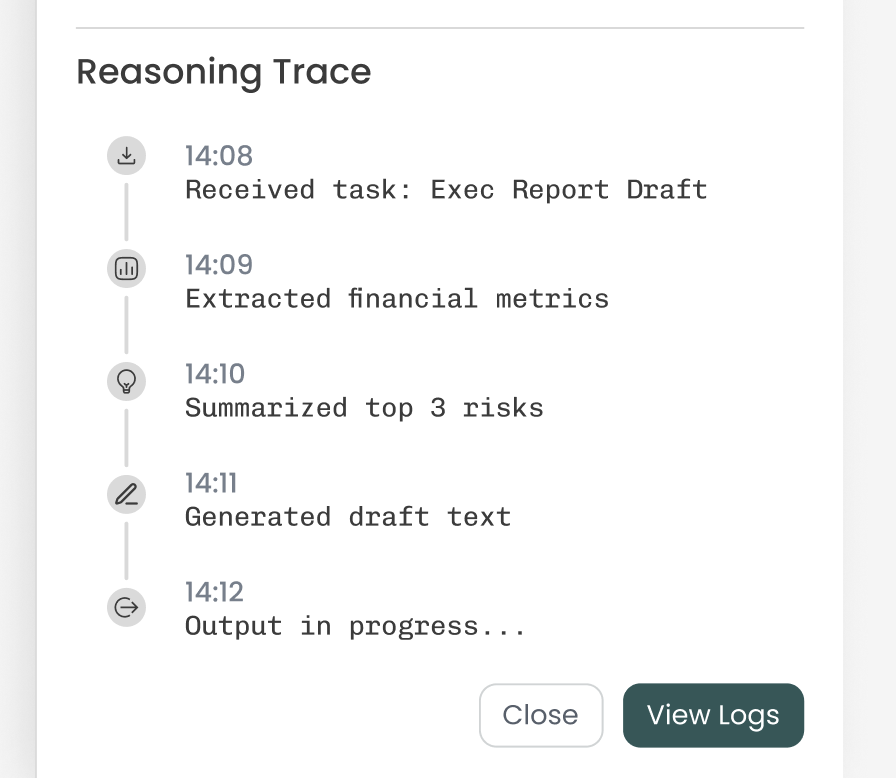

Solution: Agent outputs include reasoning traces in plain language, paired with visual confidence scales and step-based histories.

Overlay detail cards reduced cognitive overload and gave users quick access to agent information or actions.

The conflict display in the task card overlay allows users to quickly view conflicts, inspect reasoning traces, and either choose a preferred output or escalate the conflict to a human reviewer.
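One way the conflicts shown in that overlay might be derived is sketched below. It is a simplification under stated assumptions: the `AgentOutput` fields are hypothetical, and "divergent" is taken to mean that the answers differ after trimming whitespace and lowercasing, which a real system would likely replace with a richer comparison.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AgentOutput:
    # Hypothetical record of one agent's result for one task.
    task_id: str
    agent: str
    answer: str
    confidence: float  # assumed 0.0-1.0 scale


def find_conflicts(outputs):
    """Map each task with divergent answers to its outputs, most confident first."""
    by_task = defaultdict(list)
    for out in outputs:
        by_task[out.task_id].append(out)

    conflicts = {}
    for task_id, outs in by_task.items():
        # Assumption: answers diverge if they differ after simple normalisation.
        distinct = {o.answer.strip().lower() for o in outs}
        if len(distinct) > 1:
            conflicts[task_id] = sorted(
                outs, key=lambda o: o.confidence, reverse=True
            )
    return conflicts
```

Ordering each conflict by confidence gives the overlay a sensible default to show first, while still leaving the choice (or escalation) to the user.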

Reasoning traces could be hidden by default in the UI to avoid information overload, while remaining easy to access for users who want a deeper understanding of agent decision-making.

Card-based layout enables quick scanning and access to agent states, tasks, and alerts.

Users can trace how a problem moved across agents and where intervention occurred.
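A task trace of this kind could be backed by a simple ordered event log. The sketch below is illustrative only: the `TraceEvent` fields and the action names are assumptions chosen to mirror the handoff, split, and modify language used for the Task View.

```python
from dataclasses import dataclass


@dataclass
class TraceEvent:
    # Hypothetical entry in a task's history.
    step: int
    actor: str            # agent name, or a user identifier for interventions
    action: str           # e.g. "assigned", "handed_off", "split", "modified"
    is_human: bool = False
    note: str = ""


def agent_path(trace):
    """Return the ordered list of agents a task passed through."""
    path = []
    for event in sorted(trace, key=lambda e: e.step):
        # Skip human steps and collapse consecutive events from the same agent.
        if not event.is_human and (not path or path[-1] != event.actor):
            path.append(event.actor)
    return path


def interventions(trace):
    """Return the steps at which a human stepped in."""
    return [e.step for e in sorted(trace, key=lambda e: e.step) if e.is_human]
```

The first function supports a breadcrumb-style view of the handoff chain; the second marks where intervention points would be drawn on that chain.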

Automatically surfaces divergent outputs between agents, prompting user review.

Support for predefined agent archetypes (e.g. “Researcher,” “Critic,” “Synthesizer”) with editable behavior constraints.
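To make "editable behavior constraints" concrete, archetypes could be stored as immutable presets that users copy and adjust. The three archetype names come from the concept above; the constraint fields and their default values are invented for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Constraints:
    # Hypothetical behaviour constraints; none of these fields are from the design.
    max_parallel_tasks: int = 1
    may_override_peers: bool = False
    min_confidence_to_publish: float = 0.5


# Presets keyed by archetype name (values are illustrative only).
ARCHETYPES = {
    "Researcher": Constraints(max_parallel_tasks=3),
    "Critic": Constraints(may_override_peers=True, min_confidence_to_publish=0.7),
    "Synthesizer": Constraints(max_parallel_tasks=2),
}


def customise(archetype, **overrides):
    """Return an edited copy of a preset, leaving the preset itself unchanged."""
    return replace(ARCHETYPES[archetype], **overrides)
```

For example, `customise("Critic", min_confidence_to_publish=0.9)` yields a stricter Critic while the shared preset keeps its original values, so edits to one agent never leak into others built from the same archetype.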

This project demonstrates how UI design can support emerging patterns in AI-human collaboration, especially as large-scale agent ecosystems become viable.

Use cases include:

The system balances high visibility with low friction, enabling users to guide intelligent systems without being overwhelmed by autonomy or scale.

This project required shifting away from traditional assistant-based UX models and toward multi-entity coordination. Designing not just for the user, but also for the system’s internal interactions, helped me think about interface design as a structural logic — not just a surface.

Users need clarity, not control panels. The UI must support smart defaults, while giving users paths to intervene confidently.

When designing for systems with emergent behaviour, transparency and hierarchy are key — especially under real-time conditions.

Designing this interface meant understanding not just how users interact, but how agents interact with each other — and what visibility humans need into that system.